| Question.1 A company is building a web-based AI application by using Amazon SageMaker. The application will provide the following capabilities and features: ML experimentation, training, a central model registry, model deployment, and model monitoring. The application must ensure secure and isolated use of training data during the ML lifecycle. The training data is stored in Amazon S3. The company needs to use the central model registry to manage different versions of models in the application. Which action will meet this requirement with the LEAST operational overhead? (A) Create a separate Amazon Elastic Container Registry (Amazon ECR) repository for each model. (B) Use Amazon Elastic Container Registry (Amazon ECR) and unique tags for each model version. (C) Use the SageMaker Model Registry and model groups to catalog the models. (D) Use the SageMaker Model Registry and unique tags for each model version. |


Answer: C
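Here is option C in practice, as a minimal sketch that uses the boto3 SageMaker client calls `create_model_package_group` and `create_model_package`. The client is passed in as a parameter (normally `boto3.client("sagemaker")`) so the sketch can run against a stub. The group name, image URI, model artifact path, and `text/csv` content types are illustrative assumptions.

```python
from typing import Any


def create_model_group(sm_client: Any, group_name: str) -> None:
    """One-time setup: a model package group holds every version of one model."""
    sm_client.create_model_package_group(
        ModelPackageGroupName=group_name,
        ModelPackageGroupDescription=f"All registered versions of {group_name}",
    )


def register_model_version(sm_client: Any, group_name: str,
                           image_uri: str, model_data_url: str) -> str:
    """Add a new version to the group and return its model package ARN.

    SageMaker numbers versions within the group automatically, so no
    per-version repository or tagging scheme has to be maintained.
    """
    response = sm_client.create_model_package(
        ModelPackageGroupName=group_name,
        InferenceSpecification={
            "Containers": [{"Image": image_uri, "ModelDataUrl": model_data_url}],
            "SupportedContentTypes": ["text/csv"],       # placeholder
            "SupportedResponseMIMETypes": ["text/csv"],  # placeholder
        },
        # New versions wait for review before they can be deployed.
        ModelApprovalStatus="PendingManualApproval",
    )
    return response["ModelPackageArn"]
```

Each `create_model_package` call in the same group receives the next version number. Option C therefore needs no per-version ECR repositories (A) and no hand-maintained tags (B, D).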

| Question.2 A company is building a web-based AI application by using Amazon SageMaker. The application will provide the following capabilities and features: ML experimentation, training, a central model registry, model deployment, and model monitoring. The application must ensure secure and isolated use of training data during the ML lifecycle. The training data is stored in Amazon S3. The company is experimenting with consecutive training jobs. How can the company MINIMIZE infrastructure startup times for these jobs? (A) Use Managed Spot Training. (B) Use SageMaker managed warm pools. (C) Use SageMaker Training Compiler. (D) Use the SageMaker distributed data parallelism (SMDDP) library. |


Answer: B
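Warm pools are configured per training job through the `KeepAlivePeriodInSeconds` field of the `ResourceConfig` that is passed to `CreateTrainingJob`. The sketch below builds that structure and adds a simplified, hypothetical reuse check. The instance type, count, and volume size are placeholder values.

```python
MAX_KEEP_ALIVE_SECONDS = 3600  # upper limit SageMaker accepts for warm pools


def warm_pool_resource_config(instance_type: str, instance_count: int,
                              volume_gb: int, keep_alive_seconds: int) -> dict:
    """Build a CreateTrainingJob ResourceConfig that retains a warm pool."""
    if not 0 < keep_alive_seconds <= MAX_KEEP_ALIVE_SECONDS:
        raise ValueError("keep_alive_seconds must be between 1 and 3600")
    return {
        "InstanceType": instance_type,
        "InstanceCount": instance_count,
        "VolumeSizeInGB": volume_gb,
        # Instances stay provisioned this long after the job finishes,
        # so the next matching job can skip infrastructure startup.
        "KeepAlivePeriodInSeconds": keep_alive_seconds,
    }


def same_cluster_shape(first: dict, second: dict) -> bool:
    """Hypothetical, simplified reuse check on instance type, count, and volume.

    SageMaker's actual matching rules also cover other settings (for example
    the IAM role and network configuration); see the warm pools documentation.
    """
    keys = ("InstanceType", "InstanceCount", "VolumeSizeInGB")
    return all(first[k] == second[k] for k in keys)
```

With the SageMaker Python SDK, the same setting is the `keep_alive_period_in_seconds` argument of an `Estimator`. The other options target cost (A), training computation speed (C), or distributed training (D), not startup time.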

| Question.3 A company is building a web-based AI application by using Amazon SageMaker. The application will provide the following capabilities and features: ML experimentation, training, a central model registry, model deployment, and model monitoring. The application must ensure secure and isolated use of training data during the ML lifecycle. The training data is stored in Amazon S3. The company must implement a manual approval-based workflow to ensure that only approved models can be deployed to production endpoints. Which solution will meet this requirement? (A) Use SageMaker Experiments to facilitate the approval process during model registration. (B) Use SageMaker ML Lineage Tracking on the central model registry. Create tracking entities for the approval process. (C) Use SageMaker Model Monitor to evaluate the performance of the model and to manage the approval. (D) Use SageMaker Pipelines. When a model version is registered, use the AWS SDK to change the approval status to “Approved.” |


Answer: D
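The manual approval step in option D reduces to one SDK call, `update_model_package`. It is made after the pipeline registers a version with status `PendingManualApproval`. The following is a minimal sketch. `is_deployable` is a hypothetical deployment gate that a CI/CD stage could run before it updates a production endpoint. The client is injected (normally `boto3.client("sagemaker")`).

```python
from typing import Any


def approve_model_version(sm_client: Any, model_package_arn: str,
                          reviewer_note: str) -> None:
    """Manual approval step: a reviewer changes the status to Approved."""
    sm_client.update_model_package(
        ModelPackageArn=model_package_arn,
        ModelApprovalStatus="Approved",
        ApprovalDescription=reviewer_note,
    )


def is_deployable(sm_client: Any, model_package_arn: str) -> bool:
    """Hypothetical deployment gate: only Approved versions reach production."""
    description = sm_client.describe_model_package(
        ModelPackageName=model_package_arn)
    return description["ModelApprovalStatus"] == "Approved"
```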

| Question.4 A company is building a web-based AI application by using Amazon SageMaker. The application will provide the following capabilities and features: ML experimentation, training, a central model registry, model deployment, and model monitoring. The application must ensure secure and isolated use of training data during the ML lifecycle. The training data is stored in Amazon S3. The company needs to run an on-demand workflow to monitor bias drift for models that are deployed to real-time endpoints from the application. Which action will meet this requirement? (A) Configure the application to invoke an AWS Lambda function that runs a SageMaker Clarify job. (B) Invoke an AWS Lambda function to pull the sagemaker-model-monitor-analyzer built-in SageMaker image. (C) Use AWS Glue Data Quality to monitor bias. (D) Use SageMaker notebooks to compare the bias. |


Answer: A
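The following is a minimal sketch of option A. It assumes the application invokes a Lambda function that starts a SageMaker Clarify processing job with boto3's `create_processing_job`. The event keys, instance type, and job-name prefix are hypothetical. The container paths and input/output names follow the Clarify processing-job convention as we understand it, so verify them against the Clarify documentation. The Clarify image URI is region-specific; the SageMaker Python SDK can look it up with `image_uris.retrieve`.

```python
import time
from typing import Any

# Assumed Clarify container paths; verify against the Clarify documentation.
CONFIG_PATH = "/opt/ml/processing/input/config"
DATA_PATH = "/opt/ml/processing/input/data"
OUTPUT_PATH = "/opt/ml/processing/output"


def build_clarify_job_request(job_name: str, event: dict) -> dict:
    """Build CreateProcessingJob arguments for an on-demand bias analysis.

    The event keys (role_arn, clarify_image_uri, config_s3_uri, data_s3_uri,
    output_s3_uri) are hypothetical; the application decides what it sends.
    """
    def s3_input(name: str, uri: str, local_path: str) -> dict:
        return {"InputName": name,
                "S3Input": {"S3Uri": uri, "LocalPath": local_path,
                            "S3DataType": "S3Prefix", "S3InputMode": "File"}}

    return {
        "ProcessingJobName": job_name,
        "RoleArn": event["role_arn"],
        "AppSpecification": {"ImageUri": event["clarify_image_uri"]},
        "ProcessingInputs": [
            # The analysis config names the label, facets, and bias metrics.
            s3_input("analysis_config", event["config_s3_uri"], CONFIG_PATH),
            s3_input("dataset", event["data_s3_uri"], DATA_PATH),
        ],
        "ProcessingOutputConfig": {"Outputs": [{
            "OutputName": "analysis_result",
            "S3Output": {"S3Uri": event["output_s3_uri"],
                         "LocalPath": OUTPUT_PATH,
                         "S3UploadMode": "EndOfJob"}}]},
        "ProcessingResources": {"ClusterConfig": {
            "InstanceCount": 1, "InstanceType": "ml.m5.xlarge",
            "VolumeSizeInGB": 30}},
    }


def start_bias_check(sm_client: Any, event: dict) -> str:
    """Start the Clarify job and return its name without waiting for it."""
    job_name = f"bias-check-{int(time.time())}"
    sm_client.create_processing_job(**build_clarify_job_request(job_name, event))
    return job_name


def lambda_handler(event: dict, context: Any) -> dict:
    """Entry point that the application invokes on demand."""
    import boto3  # bundled with the AWS Lambda Python runtime
    return {"processing_job_name": start_bias_check(boto3.client("sagemaker"), event)}
```

The handler returns as soon as the job starts. Clarify writes its bias report to the output prefix when the job finishes.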

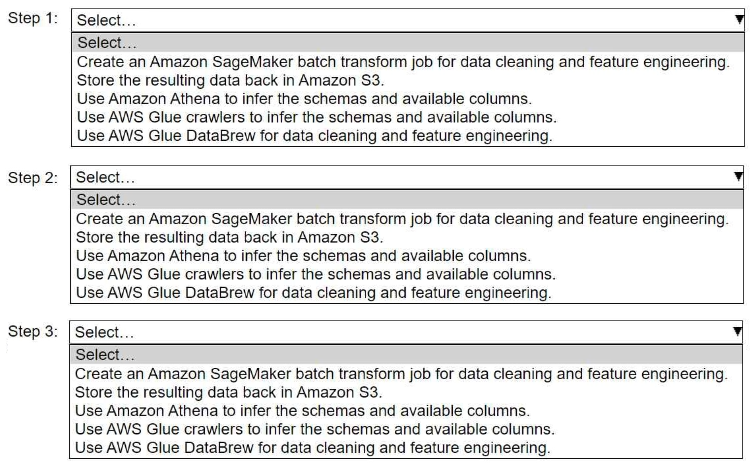

| Question.5 HOTSPOT – A company stores historical data in .csv files in Amazon S3. Only some of the rows and columns in the .csv files are populated. The columns are not labeled. An ML engineer needs to prepare and store the data so that the company can use the data to train ML models. Select and order the correct steps from the following list to perform this task. Each step should be selected one time or not at all. (Select and order three.) • Create an Amazon SageMaker batch transform job for data cleaning and feature engineering. • Store the resulting data back in Amazon S3. • Use Amazon Athena to infer the schemas and available columns. • Use AWS Glue crawlers to infer the schemas and available columns. • Use AWS Glue DataBrew for data cleaning and feature engineering.  |


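Answer: (1) Use AWS Glue crawlers to infer the schemas and available columns. (2) Use AWS Glue DataBrew for data cleaning and feature engineering. (3) Store the resulting data back in Amazon S3.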

Explanation:

Step 1: Use AWS Glue crawlers to infer the schemas and available columns.

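AWS Glue crawlers scan data in Amazon S3, infer its schema and structure, and automatically create metadata tables in the AWS Glue Data Catalog.

Schema discovery comes first because the columns are unlabeled. The crawler's table definition gives every later step a consistent column structure to work with. Amazon Athena is not a substitute here: Athena queries tables that are already defined in the Data Catalog and does not infer schemas from raw files on its own.

Key capability: automatic discovery of schemas, data types, and partitions.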
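Step 1 can be scripted with boto3's Glue client (`create_crawler`, `start_crawler`, `get_crawler`, `get_tables`). This is a sketch under several assumptions. The crawler, database, role, and S3 path values are placeholders, and `get_tables` pagination is ignored. For headerless .csv files, the crawler typically assigns generated column names such as `col0` and `col1`, which `inferred_columns` would surface.

```python
import time
from typing import Any, Callable


def crawl_csv_prefix(glue_client: Any, crawler_name: str, role_arn: str,
                     database: str, s3_path: str) -> None:
    """Create a crawler over the raw .csv prefix and start it."""
    glue_client.create_crawler(
        Name=crawler_name,
        Role=role_arn,
        DatabaseName=database,  # Data Catalog database that receives the tables
        Targets={"S3Targets": [{"Path": s3_path}]},
    )
    glue_client.start_crawler(Name=crawler_name)


def wait_for_crawler(glue_client: Any, crawler_name: str,
                     poll_seconds: float = 30.0,
                     sleep: Callable[[float], None] = time.sleep) -> None:
    """Crawlers run asynchronously; poll until the crawler is READY again."""
    while True:
        sleep(poll_seconds)  # give the run time to start before checking
        state = glue_client.get_crawler(Name=crawler_name)["Crawler"]["State"]
        if state == "READY":
            return


def inferred_columns(glue_client: Any, database: str) -> dict:
    """Map each table the crawler created to its inferred column names.

    Only the first page of get_tables results is read in this sketch.
    """
    tables = glue_client.get_tables(DatabaseName=database)["TableList"]
    return {t["Name"]: [c["Name"] for c in t["StorageDescriptor"]["Columns"]]
            for t in tables}
```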

Step 2: Use AWS Glue DataBrew for data cleaning and feature engineering.

AWS Glue DataBrew is a visual data preparation tool that lets users clean and transform data without writing code.

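After schema discovery, the next step is to clean and transform the data. Only some rows and columns are populated, so this typically means handling missing values (dropping or filling them), standardizing formats, and engineering features. A SageMaker batch transform job is a weaker fit. It is designed to run a trained model over a dataset, which adds setup that DataBrew's no-code transformations avoid.

Key capabilities: data cleaning, transformation, and feature engineering, with a preview of each transformation before it is applied.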
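To connect step 2 to step 1, a DataBrew dataset can point directly at the table the crawler created. The following is a minimal boto3 sketch that assumes placeholder dataset, database, and table names. The cleaning recipe itself is usually built interactively in a DataBrew project and is not shown.

```python
from typing import Any


def databrew_dataset_from_catalog(databrew_client: Any, dataset_name: str,
                                  database: str, table: str) -> dict:
    """Register the crawler's Data Catalog table as a DataBrew dataset."""
    request = {
        "Name": dataset_name,
        "Input": {
            # Reusing the catalog table keeps the schema inferred in step 1.
            "DataCatalogInputDefinition": {
                "DatabaseName": database,
                "TableName": table,
            }
        },
    }
    databrew_client.create_dataset(**request)
    return request
```

DataBrew can also read the .csv files straight from S3 through `S3InputDefinition`. For unlabeled columns, `FormatOptions={"Csv": {"HeaderRow": False}}` tells DataBrew to treat the first row as data, not as a header.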

Step 3: Store the resulting data back in Amazon S3.

DataBrew jobs write their output to Amazon S3. The cleaned and transformed dataset is therefore stored back in S3, where training jobs can read it.

Amazon S3 acts as a central data lake for storage, sharing, or further processing (e.g., using Athena or SageMaker).
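Step 3 is handled by the DataBrew job's output configuration. Below is a sketch with boto3's `create_recipe_job` and `start_job_run`, assuming a recipe that has already been published. The job, recipe, bucket, and key names, the recipe version `"1.0"`, and the Parquet output format are illustrative choices.

```python
from typing import Any


def run_recipe_job_to_s3(databrew_client: Any, job_name: str, role_arn: str,
                         dataset_name: str, recipe_name: str,
                         recipe_version: str, bucket: str,
                         key_prefix: str) -> str:
    """Apply a published recipe to the dataset and write the result to S3."""
    databrew_client.create_recipe_job(
        Name=job_name,
        RoleArn=role_arn,
        DatasetName=dataset_name,
        RecipeReference={"Name": recipe_name, "RecipeVersion": recipe_version},
        Outputs=[{
            "Location": {"Bucket": bucket, "Key": key_prefix},
            # Assumed format: Parquet keeps the prepared data compact and typed.
            "Format": "PARQUET",
            "Overwrite": True,
        }],
    )
    # start_job_run is asynchronous; the returned run ID can be polled later.
    return databrew_client.start_job_run(Name=job_name)["RunId"]
```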