| Question.21 A company has a legacy application that runs on a single Amazon EC2 instance. A security audit shows that the application has been using an IAM access key within its code to access an Amazon S3 bucket that is named DOC-EXAMPLE-BUCKET1 in the same AWS account. This access key pair has the s3:GetObject permission to all objects in only this S3 bucket. The company takes the application offline because the application is not compliant with the company’s security policies for accessing other AWS resources from Amazon EC2. A security engineer validates that AWS CloudTrail is turned on in all AWS Regions. CloudTrail is sending logs to an S3 bucket that is named DOC-EXAMPLE-BUCKET2. This S3 bucket is in the same AWS account as DOC-EXAMPLE-BUCKET1. However, CloudTrail has not been configured to send logs to Amazon CloudWatch Logs. The company wants to know if any objects in DOC-EXAMPLE-BUCKET1 were accessed with the IAM access key in the past 60 days. If any objects were accessed, the company wants to know if any of the objects that are text files (.txt extension) contained personally identifiable information (PII). Which combination of steps should the security engineer take to gather this information? (Choose two.) (A) Use Amazon CloudWatch Logs Insights to identify any objects in DOC-EXAMPLE-BUCKET1 that contain PII and that were available to the access key. (B) Use Amazon OpenSearch Service to query the CloudTrail logs in DOC-EXAMPLE-BUCKET2 for API calls that used the access key to access an object that contained PII. (C) Use Amazon Athena to query the CloudTrail logs in DOC-EXAMPLE-BUCKET2 for any API calls that used the access key to access an object that contained PII. (D) Use AWS Identity and Access Management Access Analyzer to identify any API calls that used the access key to access objects that contained PII in DOC-EXAMPLE-BUCKET1. 
(E) Configure Amazon Macie to identify any objects in DOC-EXAMPLE-BUCKET1 that contain PII and that were available to the access key. |


Answer: CE

Explanation:

Here’s a detailed justification for the answer CE:

The question requires identifying if a specific IAM access key was used to access objects in an S3 bucket (DOC-EXAMPLE-BUCKET1) within the last 60 days and whether any accessed .txt files contained PII.

Option B is less suitable because OpenSearch Service would typically require the CloudTrail logs to be loaded into a domain before they could be searched, whereas Athena can query the logs in place in DOC-EXAMPLE-BUCKET2. Note that no log-query option can identify PII by itself: CloudTrail captures API calls, not the content of objects. Option D is incorrect because IAM Access Analyzer focuses on identifying unintended access to resources and doesn’t analyze the content of accessed objects to detect PII. Option A is incorrect because CloudWatch Logs Insights works only with CloudWatch Logs data, and CloudTrail is not configured to send logs to CloudWatch Logs. CloudWatch Logs Insights also cannot analyze the content of S3 objects to find PII.

Option E, configuring Amazon Macie, is correct because Macie is a data security and data privacy service that uses machine learning and pattern matching to discover sensitive data, including PII, in S3 buckets. Macie can analyze the contents of objects in DOC-EXAMPLE-BUCKET1 to identify if any .txt files contain PII. It works independently of the access key used to access the objects, focusing solely on the data itself. This is specifically relevant as the company needs to know which objects contain PII, regardless of how they were accessed. https://aws.amazon.com/macie/

Option C, using Amazon Athena to query CloudTrail logs, is correct because CloudTrail logs contain records of API calls made to AWS services, including S3. By querying these logs, we can filter for API calls that used the specific IAM access key and accessed objects in DOC-EXAMPLE-BUCKET1 within the last 60 days. This confirms if the access key was indeed used to access objects in the specified bucket. https://aws.amazon.com/athena/ and https://docs.aws.amazon.com/awscloudtrail/latest/userguide/cloudtrail-concepts.html

Therefore, by combining the results of these two steps, the security engineer can determine if the access key was used to access objects in DOC-EXAMPLE-BUCKET1 and whether any accessed .txt files contain PII.
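The Athena step described above can be sketched as a small query builder. This is a minimal sketch under stated assumptions: the table name `cloudtrail_logs` is hypothetical and is assumed to use the standard CloudTrail table layout for Athena, the access key ID is the AWS documentation placeholder, and `GetObject` calls only appear in the logs if S3 data event logging was enabled on the trail.

```python
def build_access_key_query(table: str, access_key_id: str, bucket: str, days: int = 60) -> str:
    """Return Athena SQL listing S3 object reads made with one access key.

    Values are interpolated directly for illustration only; real code
    should validate or parameterize them.
    """
    return f"""
SELECT eventtime,
       eventname,
       json_extract_scalar(requestparameters, '$.key') AS object_key,
       sourceipaddress
FROM {table}
WHERE useridentity.accesskeyid = '{access_key_id}'
  AND eventsource = 's3.amazonaws.com'
  AND eventname = 'GetObject'
  AND json_extract_scalar(requestparameters, '$.bucketName') = '{bucket}'
  AND from_iso8601_timestamp(eventtime) > date_add('day', -{days}, now())
ORDER BY eventtime
""".strip()


# Hypothetical table name and placeholder access key ID.
query = build_access_key_query(
    "cloudtrail_logs", "AKIAIOSFODNN7EXAMPLE", "DOC-EXAMPLE-BUCKET1"
)
print(query)
```

The object keys returned by such a query could then be compared against the Macie findings for .txt objects in the same bucket.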

| Question.22 A security engineer creates an Amazon S3 bucket policy that denies access to all users. A few days later, the security engineer adds an additional statement to the bucket policy to allow read-only access to one other employee. Even after updating the policy, the employee still receives an access denied message. What is the likely cause of this access denial? (A) The ACL in the bucket needs to be updated. (B) The IAM policy does not allow the user to access the bucket. (C) It takes a few minutes for a bucket policy to take effect. (D) The allow permission is being overridden by the deny. |


Answer: D

Explanation:

Here’s a detailed justification for why option D is the most likely cause of the access denial, based on AWS security principles:

The core concept at play here is that, in AWS IAM and S3 bucket policies, explicit Deny statements always override Allow statements. This is a fundamental principle of how AWS authorization works. Even if an Allow statement grants a user permission to perform an action, if a Deny statement exists that applies to the same user and action, the Deny will take precedence.

In this scenario, the initial bucket policy explicitly denied all access to all users. Subsequently, an Allow statement was added to grant one employee read-only access. However, the original Deny statement is still in effect. Because it applies to all users, including the specified employee, the explicit Deny overrides the new Allow. Therefore, the employee continues to receive an access denied message.
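The precedence rule can be illustrated with a deliberately simplified evaluator. This is a toy model, not the real AWS evaluation engine: it ignores resources, conditions, and wildcard patterns, and the principal name `employee` is hypothetical.

```python
def evaluate(statements, principal, action):
    """Toy policy evaluation: explicit Deny beats Allow, default is Deny."""
    matching = [
        s for s in statements
        if s["Principal"] in ("*", principal) and s["Action"] in ("*", action)
    ]
    if any(s["Effect"] == "Deny" for s in matching):
        return "Deny"   # an explicit deny always wins
    if any(s["Effect"] == "Allow" for s in matching):
        return "Allow"
    return "Deny"       # implicit deny when nothing matches


deny_all = {"Effect": "Deny", "Principal": "*", "Action": "*"}
allow_read = {"Effect": "Allow", "Principal": "employee", "Action": "s3:GetObject"}

# The original policy plus the added Allow statement still denies the employee.
print(evaluate([deny_all, allow_read], "employee", "s3:GetObject"))
# With the blanket Deny removed, the Allow takes effect.
print(evaluate([allow_read], "employee", "s3:GetObject"))
```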

Let’s examine why the other options are less likely:

- A. The ACL in the bucket needs to be updated: Access Control Lists (ACLs) can grant access to S3 buckets, but an ACL grant cannot override an explicit Deny in the bucket policy. Because the existing bucket policy is the source of the issue, updating the ACL would not solve it and would only add complexity. Bucket policies are also generally the preferred method for managing S3 access, since they offer more granular control than ACLs.

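- B. The IAM policy does not allow the user to access the bucket: IAM policies are attached to the user, while bucket policies are attached to the bucket. Within the same account, an Allow in either one is normally enough to grant access, so a missing IAM permission would not by itself block an employee whom the bucket policy allows. More importantly, even if the IAM policy did allow access, the explicit Deny in the bucket policy would still override it. The scenario points to the bucket policy, not the IAM policy.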

- C. It takes a few minutes for a bucket policy to take effect: AWS services generally apply policy changes very quickly, with propagation usually occurring within seconds. A delay of days would be highly unusual and indicative of a more fundamental problem with the policy itself, rather than a simple propagation delay.

In summary, the most likely cause of the access denial is the existing Deny statement in the S3 bucket policy overriding the newly added Allow statement. To resolve this, the security engineer needs to modify the original Deny statement to exclude the employee from its scope, ensuring that the Allow statement can take effect. This could involve adding a Condition block to the Deny statement that specifies the user should be exempt from the Deny, or removing the original Deny statement entirely, and rewriting the permissions.
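One way the Condition-based exemption might look is sketched below as a Python dictionary that serializes to a bucket policy document. The bucket name, account ID, and user name are placeholders, and using `aws:PrincipalArn` with `StringNotEquals` is one possible approach among several rather than the only valid fix.

```python
import json

# Placeholder values for illustration only.
EMPLOYEE_ARN = "arn:aws:iam::111122223333:user/example-employee"
BUCKET = "DOC-EXAMPLE-BUCKET"

policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            # Deny everyone except the one employee.
            "Sid": "DenyEveryoneExceptEmployee",
            "Effect": "Deny",
            "Principal": "*",
            "Action": "s3:*",
            "Resource": [f"arn:aws:s3:::{BUCKET}", f"arn:aws:s3:::{BUCKET}/*"],
            "Condition": {"StringNotEquals": {"aws:PrincipalArn": EMPLOYEE_ARN}},
        },
        {
            # Grant the employee read-only access to objects.
            "Sid": "AllowEmployeeReadOnly",
            "Effect": "Allow",
            "Principal": {"AWS": EMPLOYEE_ARN},
            "Action": "s3:GetObject",
            "Resource": f"arn:aws:s3:::{BUCKET}/*",
        },
    ],
}

print(json.dumps(policy, indent=2))
```

With this shape, the Deny no longer matches the employee, so the Allow statement is the only one that applies to that user's read requests.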

Authoritative Links for further research:

S3 Bucket Policies: https://docs.aws.amazon.com/AmazonS3/latest/userguide/bucket-policies.html

AWS IAM Evaluation Logic: https://docs.aws.amazon.com/IAM/latest/UserGuide/reference_policies_evaluation_logic.html

| Question.23 A company is using Amazon Macie, AWS Firewall Manager, Amazon Inspector, and AWS Shield Advanced in its AWS account. The company wants to receive alerts if a DDoS attack occurs against the account. Which solution will meet this requirement? (A) Use Macie to detect an active DDoS event. Create Amazon CloudWatch alarms that respond to Macie findings. (B) Use Amazon Inspector to review resources and to invoke Amazon CloudWatch alarms for any resources that are vulnerable to DDoS attacks. (C) Create an Amazon CloudWatch alarm that monitors Firewall Manager metrics for an active DDoS event. (D) Create an Amazon CloudWatch alarm that monitors Shield Advanced metrics for an active DDoS event. |


Answer: D

Explanation:

The correct answer is D because AWS Shield Advanced is specifically designed to protect AWS resources from DDoS attacks and provides detailed metrics related to such attacks. These metrics are readily available in Amazon CloudWatch.

Here’s a detailed justification:

- AWS Shield Advanced’s Role: Shield Advanced offers enhanced DDoS protection compared to the standard AWS Shield. It provides detailed visibility into DDoS attacks targeting protected resources, including metrics and real-time event notifications. https://aws.amazon.com/shield/

- CloudWatch Integration: Shield Advanced publishes metrics to Amazon CloudWatch that indicate DDoS attack activity. These metrics include DDoSDetected, DDoSAttackBitsPerSecond, DDoSAttackPacketsPerSecond, and DDoSAttackRequestsPerSecond, and they are reported per resource protected by Shield Advanced. https://docs.aws.amazon.com/waf/latest/developerguide/ddos-metrics-cw.html

- CloudWatch Alarms: By creating CloudWatch alarms that monitor these Shield Advanced metrics, you can be alerted as soon as a DDoS attack is detected. You can configure the alarm to send notifications via Amazon SNS, send alerts to security dashboards, or even automate mitigation actions.

- Why other options are incorrect:

- A (Macie): Amazon Macie focuses on discovering and protecting sensitive data, not detecting DDoS attacks. While it analyzes data access patterns, it’s not designed to provide real-time DDoS alerts.

- B (Inspector): Amazon Inspector assesses the security vulnerabilities of EC2 instances and container images. It identifies weaknesses that could be exploited in a DDoS attack, but it doesn’t directly detect active attacks.

- C (Firewall Manager): AWS Firewall Manager provides centralized management of AWS WAF rules and other security policies across multiple accounts. While it plays a role in mitigating DDoS attacks, it doesn’t provide metrics specifically designed for DDoS detection like Shield Advanced does. Firewall Manager can distribute Shield Advanced protections across accounts, but CloudWatch alarms for DDoS events are configured on Shield Advanced metrics.

In summary, leveraging Shield Advanced metrics within CloudWatch alarms provides the most direct and effective method to receive alerts upon the occurrence of DDoS attacks against your AWS account.
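As a rough illustration, the alarm could be defined with the parameters below and passed to the CloudWatch `put_metric_alarm` API. This is a sketch under assumptions: the alarm name, resource ARN, and SNS topic ARN are hypothetical, the metric is assumed to be published in the `AWS/DDoSProtection` namespace with a `ResourceArn` dimension, and `create_alarm` needs the third-party `boto3` SDK plus valid AWS credentials, so it is not called here.

```python
def ddos_alarm_params(resource_arn: str, sns_topic_arn: str) -> dict:
    """Build put_metric_alarm arguments for a Shield Advanced DDoSDetected alarm."""
    return {
        "AlarmName": "shield-ddos-detected",        # hypothetical name
        "Namespace": "AWS/DDoSProtection",          # assumed Shield Advanced namespace
        "MetricName": "DDoSDetected",
        "Dimensions": [{"Name": "ResourceArn", "Value": resource_arn}],
        "Statistic": "Sum",
        "Period": 60,
        "EvaluationPeriods": 1,
        "Threshold": 1,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "TreatMissingData": "notBreaching",         # no data means no attack
        "AlarmActions": [sns_topic_arn],            # notify through Amazon SNS
    }


def create_alarm(params: dict) -> None:
    """Create the alarm; requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the sketch runs without the SDK installed

    boto3.client("cloudwatch").put_metric_alarm(**params)


# Hypothetical ARNs for illustration.
params = ddos_alarm_params(
    "arn:aws:elasticloadbalancing:us-east-1:111122223333:loadbalancer/app/example/0123456789abcdef",
    "arn:aws:sns:us-east-1:111122223333:ddos-alerts",
)
print(params["MetricName"], params["Threshold"])
```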

| Question.24 A company hosts a web application on an Apache web server. The application runs on Amazon EC2 instances that are in an Auto Scaling group. The company configured the EC2 instances to send the Apache web server logs to an Amazon CloudWatch Logs group that the company has configured to expire after 1 year. Recently, the company discovered in the Apache web server logs that a specific IP address is sending suspicious requests to the web application. A security engineer wants to analyze the past week of Apache web server logs to determine how many requests that the IP address sent and the corresponding URLs that the IP address requested. What should the security engineer do to meet these requirements with the LEAST effort? (A) Export the CloudWatch Logs group data to Amazon S3. Use Amazon Macie to query the logs for the specific IP address and the requested URL. (B) Configure a CloudWatch Logs subscription to stream the log group to an Amazon OpenSearch Service cluster. Use OpenSearch Service to analyze the logs for the specific IP address and the requested URLs. (C) Use CloudWatch Logs Insights and a custom query syntax to analyze the CloudWatch logs for the specific IP address and the requested URLs. (D) Export the CloudWatch Logs group data to Amazon S3. Use AWS Glue to crawl the S3 bucket for only the log entries that contain the specific IP address. Use AWS Glue to view the results. |


Answer: C

Explanation:

The security engineer needs to analyze the last week’s worth of Apache web server logs in CloudWatch to identify requests from a specific IP address and the URLs requested. The goal is to achieve this with the least amount of effort.

Option C, using CloudWatch Logs Insights, is the most efficient solution. CloudWatch Logs Insights allows direct querying and analysis of log data within CloudWatch Logs. This eliminates the need for exporting the data to other services. A custom query syntax can be used to filter the logs for the specific IP address and extract the corresponding URLs. This provides a quick and targeted analysis.

Option A involves exporting data to S3 and then using Macie. While Macie can perform data discovery and security risk analysis, it’s primarily designed for sensitive data discovery and not for ad-hoc log analysis like this. Exporting to S3 and then using Macie adds unnecessary complexity and cost.

Option B suggests streaming logs to Amazon OpenSearch Service. While OpenSearch Service is excellent for log analytics, setting up a streaming subscription and configuring an OpenSearch Service cluster is overkill for a one-time analysis of a week’s worth of logs. A subscription also forwards only new log events, so it would not cover the past week without additional work. This approach is more suitable for continuous monitoring and real-time analysis, not for a retrospective investigation.

Option D proposes exporting to S3 and using AWS Glue. Glue is a data catalog and ETL service, suitable for preparing and transforming data for analytics. It is not the most efficient tool for simply searching for specific entries within a log file. While Glue could potentially perform this task, it involves significantly more setup and configuration compared to CloudWatch Logs Insights. Glue is also unnecessary because we are not transforming or enriching the logs.

Therefore, CloudWatch Logs Insights provides the quickest and easiest way to analyze the logs directly, satisfying the “least effort” requirement.
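A query of this kind might look like the sketch below, which builds the Logs Insights query text and a one-week time range in epoch seconds. It assumes the Apache logs follow the Common Log Format, so the `parse` pattern and field names would need adjusting for other formats, and the IP address shown is a documentation placeholder.

```python
import time


def apache_ip_query(ip: str) -> str:
    """Return a Logs Insights query counting requests per URL for one client IP."""
    return (
        "parse @message '* - * [*] \"* * *\" * *' "
        "as host, identity, dateTimeString, httpVerb, url, protocol, statusCode, bytes\n"
        f"| filter host = '{ip}'\n"
        "| stats count(*) as requests by url\n"
        "| sort requests desc"
    )


def last_week_range(now=None):
    """Return (start, end) epoch seconds covering the past 7 days."""
    end = int(now if now is not None else time.time())
    return end - 7 * 24 * 3600, end


query = apache_ip_query("203.0.113.10")  # placeholder IP address
start, end = last_week_range()
print(query)
print(start, end)
```

The query text can be pasted into the Logs Insights console with the time picker set to the last week, or submitted through the `StartQuery` API with the computed start and end times.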

Authoritative links:

CloudWatch Logs Insights: https://docs.aws.amazon.com/AmazonCloudWatch/latest/logs/AnalyzingLogData.html

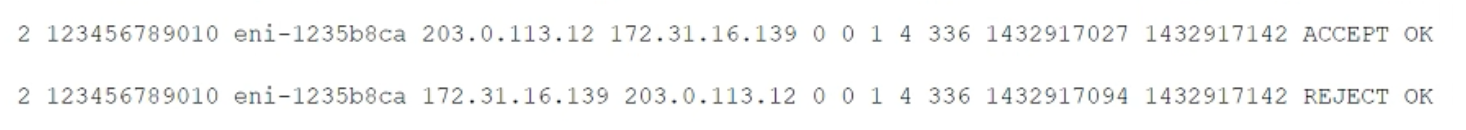

| Question.25 While securing the connection between a company’s VPC and its on-premises data center, a security engineer sent a ping command from an on-premises host (IP address 203.0.113.12) to an Amazon EC2 instance (IP address 172.31.16.139). The ping command did not return a response. The flow log in the VPC showed the following:  What action should be performed to allow the ping to work? (A) In the security group of the EC2 instance, allow inbound ICMP traffic. (B) In the security group of the EC2 instance, allow outbound ICMP traffic. (C) In the VPC’s NACL, allow inbound ICMP traffic. (D) In the VPC’s NACL, allow outbound ICMP traffic. |


Answer: D

Explanation:

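In the VPC’s NACL, allow outbound ICMP traffic. Security groups are stateful, so when an inbound echo request is allowed, the echo reply is allowed out automatically and no outbound security group rule is needed. Network ACLs are stateless, so the outbound echo reply needs its own allow rule in the NACL.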