| Question.66 A company uses Azure Stream Analytics to monitor devices. The company plans to double the number of devices that are monitored. You need to monitor a Stream Analytics job to ensure that there are enough processing resources to handle the additional load. Which metric should you monitor? A. Early Input Events B. Late Input Events C. Watermark delay D. Input Deserialization Errors |

Answer:

C

Explanation:

There are a number of resource constraints that can cause the streaming pipeline to slow down. The watermark delay metric can rise due to:

- Not enough processing resources in Stream Analytics to handle the volume of input events.
- Not enough throughput within the input event brokers, so they are throttled.
- Output sinks that are not provisioned with enough capacity, so they are throttled. The possible solutions vary widely depending on the type of output service being used.

The first cause is exactly the risk when the number of monitored devices doubles. A steadily rising watermark delay therefore signals that the job needs more processing resources (streaming units).
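The following toy Python model shows why watermark delay tracks processing capacity. It uses a hypothetical function, `simulate_watermark_delay`, with an invented constant arrival rate and a fixed per-second capacity. It approximates the metric as wall-clock time minus the timestamp of the newest processed event. It is a sketch under those assumptions, not how Stream Analytics computes the metric internally.

```python
def simulate_watermark_delay(events_per_sec, capacity_per_sec, seconds):
    """Per-second watermark delay (seconds) under a toy constant-rate queue model.

    Assumptions (hypothetical, not Stream Analytics internals):
    - events arrive evenly, so the n-th event has timestamp n / events_per_sec;
    - the job processes at most capacity_per_sec events each second, oldest first;
    - watermark delay = wall-clock time - timestamp of newest processed event.
    """
    backlog = 0      # events received but not yet processed
    processed = 0    # total events processed so far
    delays = []
    for now in range(1, seconds + 1):
        backlog += events_per_sec
        handled = min(backlog, capacity_per_sec)
        backlog -= handled
        processed += handled
        newest_processed_ts = processed / events_per_sec
        delays.append(now - newest_processed_ts)
    return delays


# Current load fits within capacity: the delay stays at zero.
print(simulate_watermark_delay(1000, 1500, 5))  # [0.0, 0.0, 0.0, 0.0, 0.0]
# Doubled device count exceeds capacity: the delay grows every second.
print(simulate_watermark_delay(2000, 1500, 5))  # [0.25, 0.5, 0.75, 1.0, 1.25]
```

In this model the delay grows without bound once input outpaces capacity. That steady climb is the pattern to watch for after the device count doubles.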

Incorrect Answers:

D: Input deserialization errors occur when the input stream of your Stream Analytics job contains malformed messages. They do not indicate a shortage of processing resources.

Reference:

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-time-handling

| Question.67 You are designing an anomaly detection solution for streaming data from an Azure IoT hub. The solution must meet the following requirements: Send the output to Azure Synapse.  Identify spikes and dips in time series data.  Minimize development and configuration effort.  Which should you include in the solution? A. Azure Databricks B. Azure Stream Analytics C. Azure SQL Database |

Answer:

B

Explanation:

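You can identify anomalies by routing data via IoT Hub to Azure Stream Analytics. Stream Analytics has built-in machine learning-based anomaly detection functions, such as AnomalyDetection_SpikeAndDip, that detect spikes and dips in time series data without custom model development. Stream Analytics can also write its output directly to Azure Synapse Analytics, which keeps development and configuration effort to a minimum.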
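In the actual solution, spike and dip detection is a built-in Stream Analytics function, so no code is needed. The sketch below only illustrates what "spikes and dips" means. It flags points with a rolling z-score, which is a much simpler, swapped-in technique and not the model behind `AnomalyDetection_SpikeAndDip`. The names `spikes_and_dips`, `window`, and `threshold`, and the sample readings, are hypothetical.

```python
from statistics import mean, pstdev


def spikes_and_dips(values, window=5, threshold=3.0):
    """Label each point 'spike', 'dip', or None using a trailing-window z-score.

    Illustrative only: a rolling z-score, not the Stream Analytics ML model.
    """
    flags = []
    for i, x in enumerate(values):
        history = values[max(0, i - window):i]
        if len(history) < window:
            flags.append(None)  # not enough history to judge yet
            continue
        mu = mean(history)
        sigma = pstdev(history)
        if sigma == 0:
            # Flat history: any change at all counts as an anomaly.
            flags.append(None if x == mu else ("spike" if x > mu else "dip"))
            continue
        z = (x - mu) / sigma
        flags.append("spike" if z > threshold else "dip" if z < -threshold else None)
    return flags


readings = [10, 11, 10, 9, 10, 11, 30, 10, 9, 10, 11, 10, -5, 10]
print(spikes_and_dips(readings))
```

With these sample readings, index 6 (value 30) is labeled a spike and index 12 (value -5) a dip. All other points are None.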

Reference:

https://docs.microsoft.com/en-us/learn/modules/data-anomaly-detection-using-azure-iot-hub/

| Question.68 You have an enterprise data warehouse in Azure Synapse Analytics named DW1 on a server named Server1. You need to determine the size of the transaction log file for each distribution of DW1. What should you do? A. On DW1, execute a query against the sys.database_files dynamic management view. B. From Azure Monitor in the Azure portal, execute a query against the logs of DW1. C. Execute a query against the logs of DW1 by using the Get-AzOperationalInsightsSearchResult PowerShell cmdlet. D. On the master database, execute a query against the sys.dm_pdw_nodes_os_performance_counters dynamic management view. |

Answer:

A

Explanation:

For the current log file size, its maximum size, and the autogrow option for the file, query the size, max_size, and growth columns for that log file in sys.database_files.
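The columns above are reported in 8-KB pages rather than bytes. The following Python sketch shows how one log-file row translates into readable units. The query string, the function names, and the sample values are hypothetical. No database connection is made, so the row values are passed in by hand. The interpretations of `-1`, `0`, and `is_percent_growth` follow the documented meanings of these catalog-view columns.

```python
PAGE_KB = 8  # size, max_size, and (non-percent) growth are reported in 8-KB pages

# Catalog query the row is assumed to come from (run on DW1; shown for reference only).
LOG_FILE_QUERY = """
SELECT name, size, max_size, growth, is_percent_growth
FROM sys.database_files
WHERE type_desc = 'LOG';
"""


def pages_to_mb(pages):
    return pages * PAGE_KB / 1024


def describe_log_file(size, max_size, growth, is_percent_growth):
    """Translate one sys.database_files log-file row into readable units."""
    if max_size == -1:
        max_desc = "unlimited (grows until the disk is full)"
    elif max_size == 0:
        max_desc = "no growth allowed"
    else:
        max_desc = f"{pages_to_mb(max_size):.0f} MB"

    if growth == 0:
        growth_desc = "autogrow disabled"
    elif is_percent_growth:
        growth_desc = f"{growth}% per autogrow"  # growth is a whole-number percentage
    else:
        growth_desc = f"{pages_to_mb(growth):.0f} MB per autogrow"

    return {"size_mb": pages_to_mb(size), "max_size": max_desc, "growth": growth_desc}


print(describe_log_file(size=1024, max_size=-1, growth=8192, is_percent_growth=False))
# {'size_mb': 8.0, 'max_size': 'unlimited (grows until the disk is full)', 'growth': '64 MB per autogrow'}
```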

Reference:

https://docs.microsoft.com/en-us/sql/relational-databases/logs/manage-the-size-of-the-transaction-log-file

| Question.69 You are planning a streaming data solution that will use Azure Databricks. The solution will stream sales transaction data from an online store. The solution has the following specifications: The output data will contain items purchased, quantity, line total sales amount, and line total tax amount.  Line total sales amount and line total tax amount will be aggregated in Databricks.  Sales transactions will never be updated. Instead, new rows will be added to adjust a sale.  You need to recommend an output mode for the dataset that will be processed by using Structured Streaming. The solution must minimize duplicate data. What should you recommend? A. Update B. Complete C. Append |

Answer:

A

Explanation:

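Update mode outputs only the rows of the aggregated result table that changed since the last trigger, so unchanged line totals are not written again.

Incorrect Answers:

B: Complete mode replaces the entire table with every batch, rewriting every aggregate even when it has not changed.

C: By default, streams run in append mode, which adds new records to the table. With aggregations, append mode requires a watermark and emits each aggregate only once it is final. That does not suit running totals that later rows keep adjusting.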
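For an aggregation query, the difference between Complete and Update output can be modeled in plain Python. In PySpark the mode itself is chosen with `df.writeStream.outputMode("update")`. The function `run_aggregation` and the sample batches below are hypothetical. They are a toy model of what each trigger emits, not Spark code. The second batch adds a new `pen` row, mirroring a sale adjusted by a new row rather than an update.

```python
from collections import defaultdict


def run_aggregation(batches, mode):
    """Rows a running sum-by-item query would emit per trigger (toy model, not Spark)."""
    totals = defaultdict(int)  # running line total per item
    emitted = []
    for batch in batches:
        changed = set()
        for item, amount in batch:
            totals[item] += amount
            changed.add(item)
        if mode == "complete":
            rows = sorted(totals.items())                   # whole result table every time
        elif mode == "update":
            rows = sorted((k, totals[k]) for k in changed)  # only rows that changed
        else:
            raise ValueError("this sketch models only 'complete' and 'update'")
        emitted.append(rows)
    return emitted


batches = [[("pen", 2), ("ink", 5)], [("pen", 3)], [("cap", 1)]]
for mode in ("complete", "update"):
    out = run_aggregation(batches, mode)
    print(mode, sum(len(rows) for rows in out), out)
```

Complete mode emits 7 rows across the three triggers, re-sending `ink` each time. Update mode emits only 4.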

Reference:

https://docs.databricks.com/delta/delta-streaming.html

| Question.70 You use Azure Stream Analytics to receive Twitter data from Azure Event Hubs and to output the data to an Azure Blob storage account. You need to output the count of tweets during the last five minutes every five minutes. Each tweet must only be counted once. Which windowing function should you use? A. a five-minute Sliding window B. a five-minute Session window C. a five-minute Hopping window that has a one-minute hop D. a five-minute Tumbling window |

Answer:

D

Explanation:

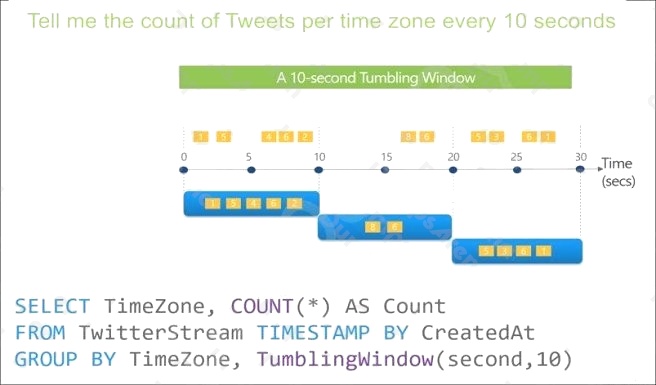

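Tumbling window functions segment a data stream into distinct time segments and perform a function against them, as in the example above. The key differentiators of a Tumbling window are that the windows repeat, do not overlap, and an event cannot belong to more than one tumbling window. A five-minute tumbling window therefore emits one count every five minutes and counts each tweet exactly once. A five-minute hopping window with a one-minute hop would count each tweet in five overlapping windows.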
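The counting difference can be checked with a small Python sketch. It uses integer minute timestamps and half-open `[start, start + size)` windows. That boundary convention is a simplifying assumption and may differ from the one Stream Analytics uses. The functions `tumbling_counts` and `hopping_counts` and the sample timestamps are hypothetical.

```python
def tumbling_counts(timestamps, size):
    """Count events per non-overlapping window [k*size, (k+1)*size)."""
    counts = {}
    for ts in timestamps:
        start = (ts // size) * size  # exactly one window contains ts
        counts[start] = counts.get(start, 0) + 1
    return dict(sorted(counts.items()))


def hopping_counts(timestamps, size, hop):
    """Count events per window [start, start+size), with start a multiple of hop."""
    counts = {}
    for ts in timestamps:
        first = ((ts - size) // hop + 1) * hop  # earliest window start still covering ts
        last = (ts // hop) * hop                # latest window start not after ts
        for start in range(first, last + 1, hop):
            counts[start] = counts.get(start, 0) + 1
    return dict(sorted(counts.items()))


tweets = [0, 1, 4, 5, 9, 12]  # arrival minute of each tweet
print(tumbling_counts(tweets, 5))                   # {0: 3, 5: 2, 10: 1}
print(sum(hopping_counts(tweets, 5, 1).values()))   # 30
```

The tumbling counts add up to the six tweets. The hopping counts add up to 30 because each tweet is counted in five windows.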

Reference:

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-window-functions