| Question.11 What is the recommended storage format to use with Spark? (A) JSON (B) XML (C) Apache Parquet (D) None of These |

Answer is (C) Apache Parquet

Apache Parquet is a columnar storage format optimized for analytical workloads, and it is the recommended storage format to use with Spark.

| Question.12 You have a SQL pool in Azure Synapse. A user reports that queries against the pool take longer than expected to complete. You determine that the issue relates to queried columnstore segments. You need to add monitoring to the underlying storage to help diagnose the issue. Which two metrics should you monitor? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point. (A) Snapshot Storage Size (B) Cache used percentage (C) DWU Limit (D) Cache hit percentage |

Answer: B D

Explanation:

D: Cache hit percentage: (cache hits / cache miss) * 100, where cache hits is the sum of all columnstore segment hits in the local SSD cache and cache miss is the sum of all columnstore segment misses in the local SSD cache, both summed across all nodes.

B: Cache used percentage: (cache used / cache capacity) * 100, where cache used is the sum of all bytes in the local SSD cache across all nodes and cache capacity is the sum of the storage capacity of the local SSD cache across all nodes.

Incorrect Answers:

C: DWU limit: Service level objective of the data warehouse.

Reference: https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-concept-resource-utilization-query-activity
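The two metrics can be illustrated with a small Python sketch. It is not a call into any Azure API: the `NodeCacheStats` class and the sample numbers are hypothetical, and the hit percentage follows the formula exactly as quoted in the explanation (hits divided by misses), which differs from a conventional hit rate of hits divided by hits plus misses.

```python
from dataclasses import dataclass


@dataclass
class NodeCacheStats:
    """Hypothetical per-node counters for the local SSD cache."""
    used_bytes: int
    capacity_bytes: int
    segment_hits: int
    segment_misses: int


def cache_used_percentage(nodes):
    """(cache used / cache capacity) * 100, summed across all nodes."""
    used = sum(n.used_bytes for n in nodes)
    capacity = sum(n.capacity_bytes for n in nodes)
    return 100 * used / capacity


def cache_hit_percentage(nodes):
    """(cache hits / cache miss) * 100, as quoted in the explanation."""
    hits = sum(n.segment_hits for n in nodes)
    misses = sum(n.segment_misses for n in nodes)
    if misses == 0:
        return float("inf")  # no misses recorded, so the quoted ratio is unbounded
    return 100 * hits / misses


# Made-up counters for a two-node pool.
nodes = [
    NodeCacheStats(used_bytes=90, capacity_bytes=100, segment_hits=20, segment_misses=80),
    NodeCacheStats(used_bytes=70, capacity_bytes=100, segment_hits=30, segment_misses=120),
]
print(cache_used_percentage(nodes))  # 80.0
print(cache_hit_percentage(nodes))   # 25.0
```

Both values aggregate over every node before dividing, so one busy node does not dominate the result.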

| Question.13 A company purchases IoT devices to monitor manufacturing machinery. The company uses an Azure IoT Hub to communicate with the IoT devices. The company must be able to monitor the devices in real-time. You need to design the solution. What should you recommend? (A) Azure Analysis Services using Azure Portal (B) Azure Analysis Services using Azure PowerShell (C) Azure Stream Analytics cloud job using Azure Portal (D) Azure Data Factory instance using Microsoft Visual Studio |

Answer: C

Explanation:

In a real-world scenario, you could have hundreds of these sensors generating events as a stream. Ideally, a gateway device would run code to push these events to Azure Event Hubs or Azure IoT Hub. Your Stream Analytics job would then ingest the events from Event Hubs or IoT Hub and run real-time analytics queries against the streams.

Create a Stream Analytics job:

In the Azure portal, select + Create a resource from the left navigation menu. Then, select Stream Analytics job from Analytics.

Reference: https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-get-started-with-azure-stream-analytics-to-process-data-from-iot-devices
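To make "real-time analytics queries against the streams" more concrete, here is a plain-Python sketch of one common streaming pattern, a tumbling-window average per device. It does not use Stream Analytics or its query language. The event shape, device names, and 30-second window are assumptions chosen for illustration.

```python
from collections import defaultdict


def tumbling_window_average(events, window_seconds):
    """Group (timestamp, device_id, temperature) events into fixed,
    non-overlapping windows and average the temperature per device."""
    sums = defaultdict(lambda: [0.0, 0])  # (window_start, device) -> [total, count]
    for timestamp, device_id, temperature in events:
        # Every timestamp maps to exactly one window start.
        window_start = timestamp - (timestamp % window_seconds)
        bucket = sums[(window_start, device_id)]
        bucket[0] += temperature
        bucket[1] += 1
    return {key: total / count for key, (total, count) in sorted(sums.items())}


# Hypothetical sensor readings: (epoch seconds, device id, temperature)
events = [
    (0, "sensor-a", 20.0),
    (10, "sensor-a", 22.0),
    (15, "sensor-b", 30.0),
    (35, "sensor-a", 26.0),
]
averages = tumbling_window_average(events, window_seconds=30)
for (window_start, device_id), avg in averages.items():
    print(window_start, device_id, avg)
```

A Stream Analytics job would express the same idea declaratively and run it continuously on incoming events, whereas this sketch processes a finished list.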

| Question.14 You are designing a star schema for a dataset that contains records of online orders. Each record includes an order date, an order due date, and an order ship date. You need to ensure that the design provides the fastest query times of the records when querying for arbitrary date ranges and aggregating by fiscal calendar attributes. Which two actions should you perform? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point. (A) Create a date dimension table that has a DateTime key. (B) Use built-in SQL functions to extract date attributes. (C) Create a date dimension table that has an integer key in the format of YYYYMMDD. (D) In the fact table, use integer columns for the date fields. (E) Use DateTime columns for the date fields. |

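Answer: C D

Explanation:

A date dimension table keyed by an integer in YYYYMMDD format (C) can store fiscal calendar attributes, which built-in SQL date functions (B) do not provide. Integer date columns in the fact table (D) then join directly to that key.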

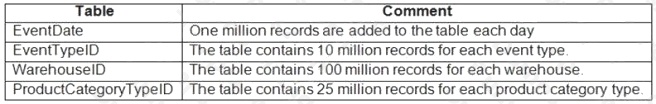
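Option (C) describes a date dimension keyed by an integer in YYYYMMDD format. The Python sketch below shows how such rows could be generated along with fiscal attributes. The column names, the July 1 fiscal year start, and the convention of naming a fiscal year after the calendar year in which it ends are all assumptions for illustration, not part of the question.

```python
from datetime import date, timedelta

FISCAL_YEAR_START_MONTH = 7  # assumption: the fiscal year starts on July 1


def date_key(d):
    """Integer key in YYYYMMDD form, e.g. 2024-03-15 -> 20240315."""
    return d.year * 10000 + d.month * 100 + d.day


def date_dimension_row(d):
    """One date dimension row with calendar and (assumed) fiscal attributes."""
    months_into_fiscal_year = (d.month - FISCAL_YEAR_START_MONTH) % 12
    # Assumption: a fiscal year is named after the calendar year in which it ends.
    fiscal_year = d.year + 1 if d.month >= FISCAL_YEAR_START_MONTH else d.year
    return {
        "DateKey": date_key(d),
        "Date": d.isoformat(),
        "CalendarYear": d.year,
        "FiscalYear": fiscal_year,
        "FiscalQuarter": months_into_fiscal_year // 3 + 1,
    }


def build_date_dimension(start, end):
    """Rows for every day from start to end, inclusive."""
    days = (end - start).days + 1
    return [date_dimension_row(start + timedelta(days=i)) for i in range(days)]


dim = build_date_dimension(date(2024, 6, 29), date(2024, 7, 2))
for row in dim:
    print(row)
```

A fact table would then store the order, due, and ship dates as three integer columns holding `DateKey` values, so range filters compare integers and fiscal attributes come from a join rather than from per-row date functions.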

| Question.15 You are designing an inventory updates table in an Azure Synapse Analytics dedicated SQL pool. The table will have a clustered columnstore index and will include the following columns:  You identify the following usage patterns: Analysts will most commonly analyze transactions for a warehouse.  Queries will summarize by product category type, date, and/or inventory event type.  You need to recommend a partition strategy for the table to minimize query times. On which column should you partition the table? (A) EventTypeID (B) ProductCategoryTypeID (C) EventDate (D) WarehouseID |

Answer: D

Explanation:

The number of records for each warehouse is large enough for the table to partition well on WarehouseID.

Note: Table partitions enable you to divide your data into smaller groups of data. In most cases, table partitions are created on a date column.

When creating partitions on clustered columnstore tables, it is important to consider how many rows belong to each partition. For optimal compression and performance of clustered columnstore tables, a minimum of 1 million rows per distribution and partition is needed. Before partitions are created, a dedicated SQL pool already divides each table into 60 distributions.
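The sizing rule above comes down to simple arithmetic, sketched here in Python. The table size is a hypothetical figure, and the calculation assumes rows spread evenly across distributions and partitions, which real data rarely does exactly.

```python
DISTRIBUTIONS = 60             # a dedicated SQL pool splits each table 60 ways
MIN_ROWS_PER_UNIT = 1_000_000  # minimum rows per distribution and partition


def rows_per_distribution_partition(total_rows, partitions):
    """Average rows in each (distribution, partition) pair, assuming even spread."""
    return total_rows / (DISTRIBUTIONS * partitions)


def max_partitions(total_rows):
    """Largest partition count that keeps at least 1 million rows per pair."""
    return total_rows // (DISTRIBUTIONS * MIN_ROWS_PER_UNIT)


total_rows = 6_000_000_000  # hypothetical table size
print(rows_per_distribution_partition(total_rows, partitions=36))
print(max_partitions(total_rows))  # 100
```

Under these assumptions, a 6-billion-row table supports at most 100 partitions before the average drops below 1 million rows per distribution and partition.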