| Question.26 What should you do to improve high availability of the real-time data processing solution? A. Deploy a High Concurrency Databricks cluster. B. Deploy an Azure Stream Analytics job and use an Azure Automation runbook to check the status of the job and to start the job if it stops. C. Set Data Lake Storage to use geo-redundant storage (GRS). D. Deploy identical Azure Stream Analytics jobs to paired regions in Azure. |

Answer: D

Explanation:

Guarantee Stream Analytics job reliability during service updates

Part of being a fully managed service is the capability to introduce new service functionality and improvements at a rapid pace. As a result, Stream Analytics can have a service update deployed on a weekly (or more frequent) basis. No matter how much testing is done, there is still a risk that an existing, running job may break due to the introduction of a bug. If you are running mission-critical jobs, these risks need to be avoided. You can reduce this risk by following Azure's paired-region model. Stream Analytics updates jobs in paired regions in separate batches, so deploying identical jobs to both regions leaves time to detect and fix a problem before the second region is updated.

Scenario: The application development team will create an Azure event hub to receive real-time sales data, including store number, date, time, product ID, customer loyalty number, price, and discount amount, from the point of sale (POS) system and output the data to data storage in Azure.

Reference:

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-job-reliability

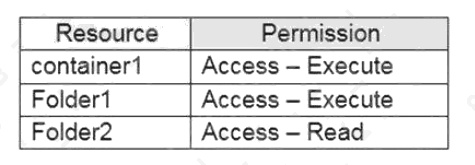

| Question.27 You have an Azure subscription linked to an Azure Active Directory (Azure AD) tenant that contains a service principal named ServicePrincipal1. The subscription contains an Azure Data Lake Storage account named adls1. Adls1 contains a folder named Folder2 that has a URI of https://adls1.dfs.core.windows.net/container1/Folder1/Folder2/. ServicePrincipal1 has the access control list (ACL) permissions shown in the following table. You need to ensure that ServicePrincipal1 can perform the following actions: Traverse child items that are created in Folder2. Read files that are created in Folder2. The solution must use the principle of least privilege. Which two permissions should you grant to ServicePrincipal1 for Folder2? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point. A. Access – Read B. Access – Write C. Access – Execute D. Default – Read E. Default – Write F. Default – Execute |

Answer: D, F

Explanation:

Execute (X) permission is required to traverse the child items of a folder.

There are two kinds of access control lists (ACLs), Access ACLs and Default ACLs.

Access ACLs: These control access to an object. Files and folders both have Access ACLs.

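Default ACLs: A “template” of ACLs associated with a folder that determines the Access ACLs for any child items that are created under that folder. Files do not have Default ACLs. Because both requirements concern items that are created in Folder2 later, the Read and Execute permissions belong on the Default ACL of Folder2.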
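As a minimal sketch of what the chosen permissions look like in the short ACL string form (`[scope:]type:id:permissions`), the helper below builds a single entry. The function name `acl_entry` and the all-zero object ID are hypothetical and used only for illustration; the real object ID of ServicePrincipal1 is not given in the question.

```python
def acl_entry(scope, kind, object_id, read=False, write=False, execute=False):
    """Build one ACL entry in short string form.

    scope: "access" or "default"; kind: for example "user" or "group".
    """
    if scope not in ("access", "default"):
        raise ValueError("scope must be 'access' or 'default'")
    # Permissions are written as three characters: r, w, x, or '-' when not granted.
    perms = (
        ("r" if read else "-")
        + ("w" if write else "-")
        + ("x" if execute else "-")
    )
    # Default ACL entries carry a "default:" prefix; access entries have none.
    prefix = "default:" if scope == "default" else ""
    return f"{prefix}{kind}:{object_id}:{perms}"


# Hypothetical object ID standing in for ServicePrincipal1.
SP1_OBJECT_ID = "00000000-0000-0000-0000-000000000000"

# Answers D and F: Default Read plus Default Execute on Folder2.
entry = acl_entry("default", "user", SP1_OBJECT_ID, read=True, execute=True)
print(entry)  # default:user:00000000-0000-0000-0000-000000000000:r-x
```

Write is left unset, which keeps the entry in line with the least-privilege requirement.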

Reference:

https://docs.microsoft.com/en-us/azure/data-lake-store/data-lake-store-access-control

| Question.28 You are designing a database for an Azure Synapse Analytics dedicated SQL pool to support workloads for detecting ecommerce transaction fraud. Data will be combined from multiple ecommerce sites and can include sensitive financial information such as credit card numbers. You need to recommend a solution that meets the following requirements: Users must be able to identify potentially fraudulent transactions. Users must be able to use credit cards as a potential feature in models. Users must NOT be able to access the actual credit card numbers. What should you include in the recommendation? A. Transparent Data Encryption (TDE) B. row-level security (RLS) C. column-level encryption D. Azure Active Directory (Azure AD) pass-through authentication |

Answer: C

Explanation:

Use Always Encrypted to secure the required columns. You can configure Always Encrypted for individual database columns containing your sensitive data. Always Encrypted is a feature designed to protect sensitive data, such as credit card numbers or national identification numbers (for example, U.S. social security numbers), stored in Azure SQL Database or SQL Server databases.

Reference:

https://docs.microsoft.com/en-us/sql/relational-databases/security/encryption/always-encrypted-database-engine

| Question.29 You have an Azure Synapse Analytics dedicated SQL pool that contains a table named Contacts. Contacts contains a column named Phone. You need to ensure that users in a specific role only see the last four digits of a phone number when querying the Phone column. What should you include in the solution? A. table partitions B. a default value C. row-level security (RLS) D. column encryption E. dynamic data masking |

Answer: E

Explanation:

Dynamic data masking helps prevent unauthorized access to sensitive data by enabling customers to designate how much of the sensitive data to reveal with minimal impact on the application layer. It's a policy-based security feature that hides the sensitive data in the result set of a query over designated database fields, while the data in the database is not changed. A partial mask on the Phone column can expose only the last four digits to users who lack permission to see unmasked data.
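In T-SQL, this kind of mask is typically defined with the built-in `partial(prefix, padding, suffix)` masking function, for example `partial(0,"XXX-XXX-",4)`. The Python function below is only a simulation of that behavior to show the effect on a query result, not how the database engine implements it. The name `mask_partial`, the sample phone number, and the handling of too-short values are assumptions made for this sketch.

```python
def mask_partial(value: str, prefix: int, padding: str, suffix: int) -> str:
    """Simulate a partial mask: keep the first `prefix` and the last `suffix`
    characters of `value` and place `padding` between them."""
    if prefix + suffix >= len(value):
        # Assumption for this sketch: values too short for the mask expose nothing.
        return padding
    head = value[:prefix]
    tail = value[len(value) - suffix:] if suffix else ""
    return head + padding + tail


# Made-up phone number; only the last four digits survive the mask.
masked = mask_partial("555-123-4567", 0, "XXX-XXX-", 4)
print(masked)  # XXX-XXX-4567
```

The stored value is never modified; only the value returned to the caller is masked, which matches the description above.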

Reference:

https://docs.microsoft.com/en-us/azure/azure-sql/database/dynamic-data-masking-overview

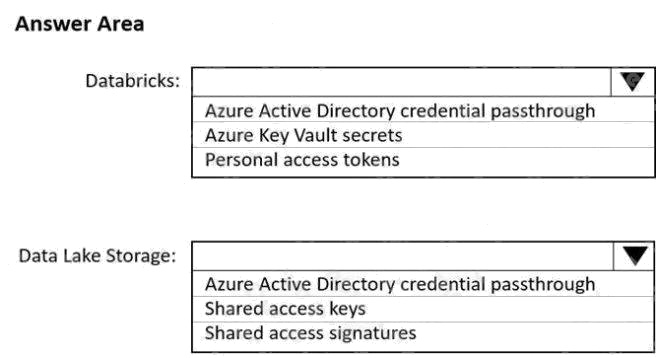

| Question.30 HOTSPOT You use Azure Data Lake Storage Gen2 to store data that data scientists and data engineers will query by using Azure Databricks interactive notebooks. Users will have access only to the Data Lake Storage folders that relate to the projects on which they work. You need to recommend which authentication methods to use for Databricks and Data Lake Storage to provide the users with the appropriate access. The solution must minimize administrative effort and development effort. Which authentication method should you recommend for each Azure service? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point. Hot Area:  |

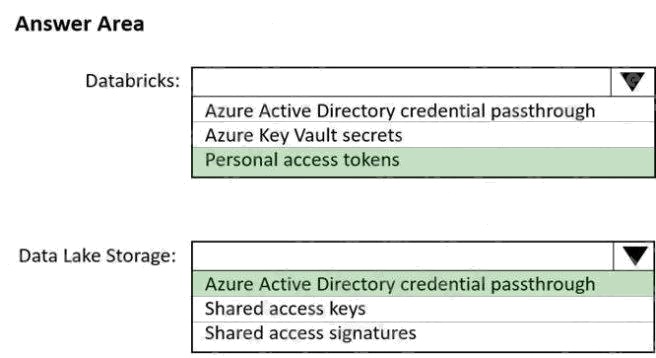

Answer:

Explanation:

Box 1: Personal access tokens

You can use storage shared access signatures (SAS) to access an Azure Data Lake Storage Gen2 storage account directly.

With SAS, you can restrict access to a storage account using temporary tokens with fine-grained access control.

You can add multiple storage accounts and configure respective SAS token providers in the same Spark session.

Box 2: Azure Active Directory credential passthrough

You can authenticate automatically to Azure Data Lake Storage Gen1 (ADLS Gen1) and Azure Data Lake Storage Gen2 (ADLS Gen2) from Azure Databricks clusters using the same Azure Active Directory (Azure AD) identity that you use to log into Azure Databricks. When you enable your cluster for Azure Data Lake Storage credential passthrough, commands that you run on that cluster can read and write data in Azure Data Lake Storage without requiring you to configure service principal credentials for access to storage.

After configuring Azure Data Lake Storage credential passthrough and creating storage containers, you can access data directly in Azure Data Lake Storage Gen1 using an adl:// path and Azure Data Lake Storage Gen2 using an abfss:// path.
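As a minimal sketch of the two path formats mentioned above, the helpers below assemble an `abfss://` path for ADLS Gen2 and an `adl://` path for ADLS Gen1. The function names, the account and container names, and the file path are hypothetical placeholders, not values from the question.

```python
def abfss_path(container: str, account: str, path: str = "") -> str:
    """Build an ADLS Gen2 path: abfss://<container>@<account>.dfs.core.windows.net/<path>."""
    return f"abfss://{container}@{account}.dfs.core.windows.net/{path.lstrip('/')}"


def adl_path(account: str, path: str = "") -> str:
    """Build an ADLS Gen1 path: adl://<account>.azuredatalakestore.net/<path>."""
    return f"adl://{account}.azuredatalakestore.net/{path.lstrip('/')}"


# Hypothetical storage account, container, and project folder.
gen2 = abfss_path("container1", "account1", "projects/projectA/sales.csv")
gen1 = adl_path("account1", "/projects/projectA/sales.csv")
print(gen2)
print(gen1)

# In a Databricks notebook on a passthrough-enabled cluster, such a path could be
# passed to a reader, for example: spark.read.format("csv").load(gen2)
# (not executed here, because it needs a running Spark session).
```

With credential passthrough, whether the read succeeds depends on the permissions of the signed-in user on that folder, which is what limits users to their own project folders.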

Reference:

https://docs.microsoft.com/en-us/azure/databricks/data/data-sources/azure/adls-gen2/azure-datalake-gen2-sas-access

https://docs.microsoft.com/en-us/azure/databricks/security/credential-passthrough/adls-passthrough