| Question.31 You are developing an application that uses Azure Data Lake Storage Gen2. You need to recommend a solution to grant permissions to a specific application for a limited time period. What should you include in the recommendation? A. role assignments B. shared access signatures (SAS) C. Azure Active Directory (Azure AD) identities D. account keys |

Answer: B

Explanation:

A shared access signature (SAS) provides secure delegated access to resources in your storage account. With a SAS, you have granular control over how a client can access your data. For example:

What resources the client may access.

What permissions they have to those resources.

How long the SAS is valid.

Reference: https://docs.microsoft.com/en-us/azure/storage/common/storage-sas-overview

| Question.32 You are designing an Azure Synapse solution that will provide a query interface for the data stored in an Azure Storage account. The storage account is only accessible from a virtual network. You need to recommend an authentication mechanism to ensure that the solution can access the source data. What should you recommend? A. a managed identity B. anonymous public read access C. a shared key |

Answer: A

Explanation:

Managed Identity authentication is required when your storage account is attached to a VNet.

Reference: https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/quickstart-bulk-load-copy-tsql-examples

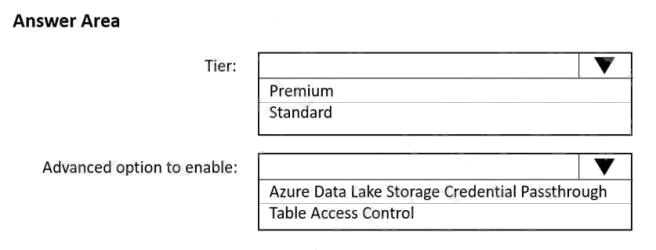

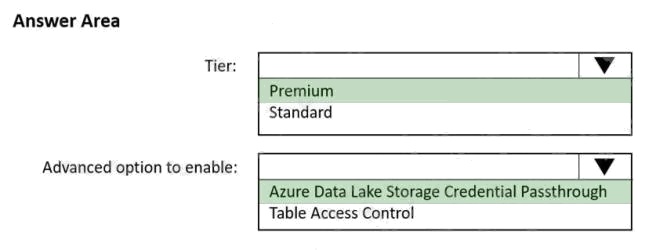

| Question.33 HOTSPOT You need to implement an Azure Databricks cluster that automatically connects to Azure Data Lake Storage Gen2 by using Azure Active Directory (Azure AD) integration. How should you configure the new cluster? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point. Hot Area:  |

Answer:

Explanation:

Box 1: Premium

Credential passthrough requires an Azure Databricks Premium plan.

Box 2: Azure Data Lake Storage credential passthrough

You can access Azure Data Lake Storage using Azure Active Directory credential passthrough.

When you enable your cluster for Azure Data Lake Storage credential passthrough, commands that you run on that cluster can read and write data in Azure Data Lake Storage without requiring you to configure service principal credentials for access to storage.

Reference: https://docs.microsoft.com/en-us/azure/databricks/security/credential-passthrough/adls-passthrough

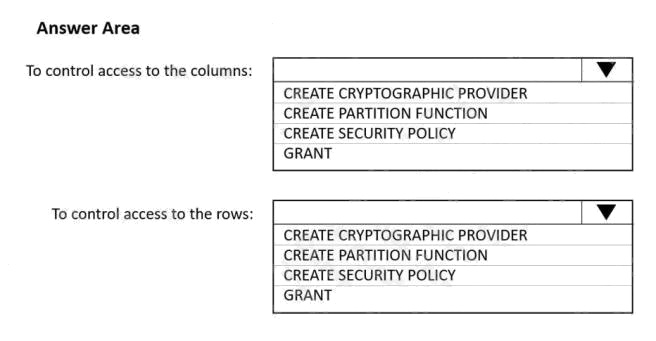

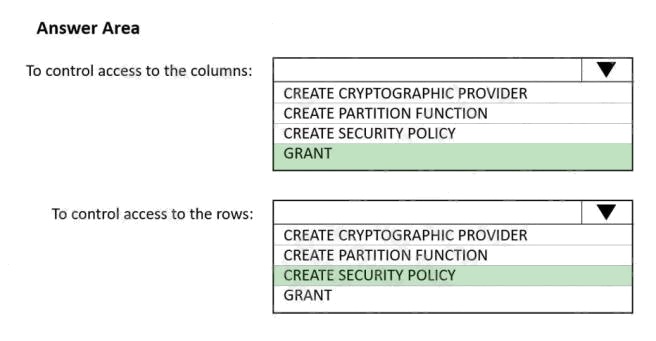

| Question.34 HOTSPOT You have an Azure Synapse Analytics SQL pool named Pool1. In Azure Active Directory (Azure AD), you have a security group named Group1. You need to control the access of Group1 to specific columns and rows in a table in Pool1. Which Transact-SQL commands should you use? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point. Hot Area:  |

Answer:

Explanation:

Box 1: GRANT

You can implement column-level security with the GRANT T-SQL statement. With this mechanism, both SQL and Azure Active Directory (Azure AD) authentication are supported.

Box 2: CREATE SECURITY POLICY

Implement row-level security (RLS) by using the CREATE SECURITY POLICY Transact-SQL statement, and predicates created as inline table-valued functions.

Reference:

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/column-level-security

https://docs.microsoft.com/en-us/sql/relational-databases/security/row-level-security
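
The two statements named above can be sketched together as follows. This is a minimal illustration, not the exam's answer area: the table `dbo.Sales`, its columns (`OrderId`, `Region`, `SalesRep`), the schema `Security`, the function `fn_SalesRepPredicate`, and the policy `SalesFilter` are all hypothetical names. It also assumes that Group1 has not yet been created as a database user, and that rows should be visible only to the user named in the `SalesRep` column.

```sql
-- Assumption: create a database user for the Azure AD group so it can be granted permissions.
CREATE USER [Group1] FROM EXTERNAL PROVIDER;
GO

-- Column-level security: Group1 can select only the listed columns of the hypothetical table.
GRANT SELECT ON dbo.Sales (OrderId, Region) TO [Group1];
GO

-- Row-level security: an inline table-valued function serves as the filter predicate.
CREATE SCHEMA Security;
GO

CREATE FUNCTION Security.fn_SalesRepPredicate (@SalesRep AS nvarchar(128))
    RETURNS TABLE
WITH SCHEMABINDING
AS
    -- A row qualifies only when its SalesRep value matches the current database user.
    RETURN SELECT 1 AS fn_result
    WHERE @SalesRep = USER_NAME();
GO

-- Bind the predicate to the table and turn the policy on.
CREATE SECURITY POLICY SalesFilter
    ADD FILTER PREDICATE Security.fn_SalesRepPredicate(SalesRep)
    ON dbo.Sales
    WITH (STATE = ON);
GO
```

`GO` is a batch separator understood by client tools such as SSMS and sqlcmd, not a T-SQL statement. It is used here because `CREATE SCHEMA` and `CREATE FUNCTION` must each be the first statement in their batch.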

| Question.35 You have an Azure Data Lake Storage Gen2 account named adls2 that is protected by a virtual network. You are designing a SQL pool in Azure Synapse that will use adls2 as a source. What should you use to authenticate to adls2? A. an Azure Active Directory (Azure AD) user B. a shared key C. a shared access signature (SAS) D. a managed identity |

Answer: D

Explanation:

Managed Identity authentication is required when your storage account is attached to a VNet.

Reference: https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/quickstart-bulk-load-copy-tsql-examples
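
As a rough sketch of what managed identity authentication looks like in practice, the COPY statement below loads from adls2 using the workspace's managed identity. The target table `dbo.StagingSales`, the file type, and the `<container>/<path>` placeholders are hypothetical. It also assumes the managed identity has already been granted a suitable data role on the storage account (for example, Storage Blob Data Reader).

```sql
-- Load Parquet files from the VNet-protected account adls2 into a hypothetical staging table.
COPY INTO dbo.StagingSales
FROM 'https://adls2.dfs.core.windows.net/<container>/<path>/*.parquet'
WITH (
    FILE_TYPE = 'PARQUET',
    -- Authenticate with the managed identity instead of a shared key or SAS token.
    CREDENTIAL = (IDENTITY = 'Managed Identity')
);
```

No secret appears in the statement, which is part of the appeal: there is no key or token to rotate or leak.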